We are always working to make our exhibits better. Your feedback helps us provide a more fulfilling experience for your fellow patrons. By interacting with this kiosk, you consent to have your contribution used for any and all artistic purposes. The artists find your input invaluable. Thank you.

Inspired by the feedback kiosks sometimes found in public restrooms, “We value your feedback.” is a speculative design piece that is meant to be both appealing and appalling.

A kiosk sits in front of a nondescript wall. A brightly lit sign reading “How do you feel?” and four large, colorful, blinking buttons draw the visitor in. Each eminently pressable button is labeled with a different emoji: a happy face, a neutral face, a slightly unhappy face, and an angry face.

When a visitor presses one of the buttons, the kiosk acknowledges their contribution with its lights, but there is no other visible result. Unbeknownst to them, a hidden camera has been watching them since they approached the kiosk. At the moment the visitor presses a button, the kiosk takes a picture of their face. This picture is sent over the internet to a facial analysis service that the artist developed and manages exclusively for this piece. The service is powered by DeepFace, an open-source facial analysis library (which shares its name with, and can use, a face-recognition model published by Facebook’s AI researchers) that claims to determine someone’s age, gender, race, and current emotion from their photograph. As improbable (and problematic) as this sounds, this is the type of analysis that is routinely performed on images uploaded to social media. But unlike social media sites, this service sends the analysis back to the kiosk and then discards the photo.

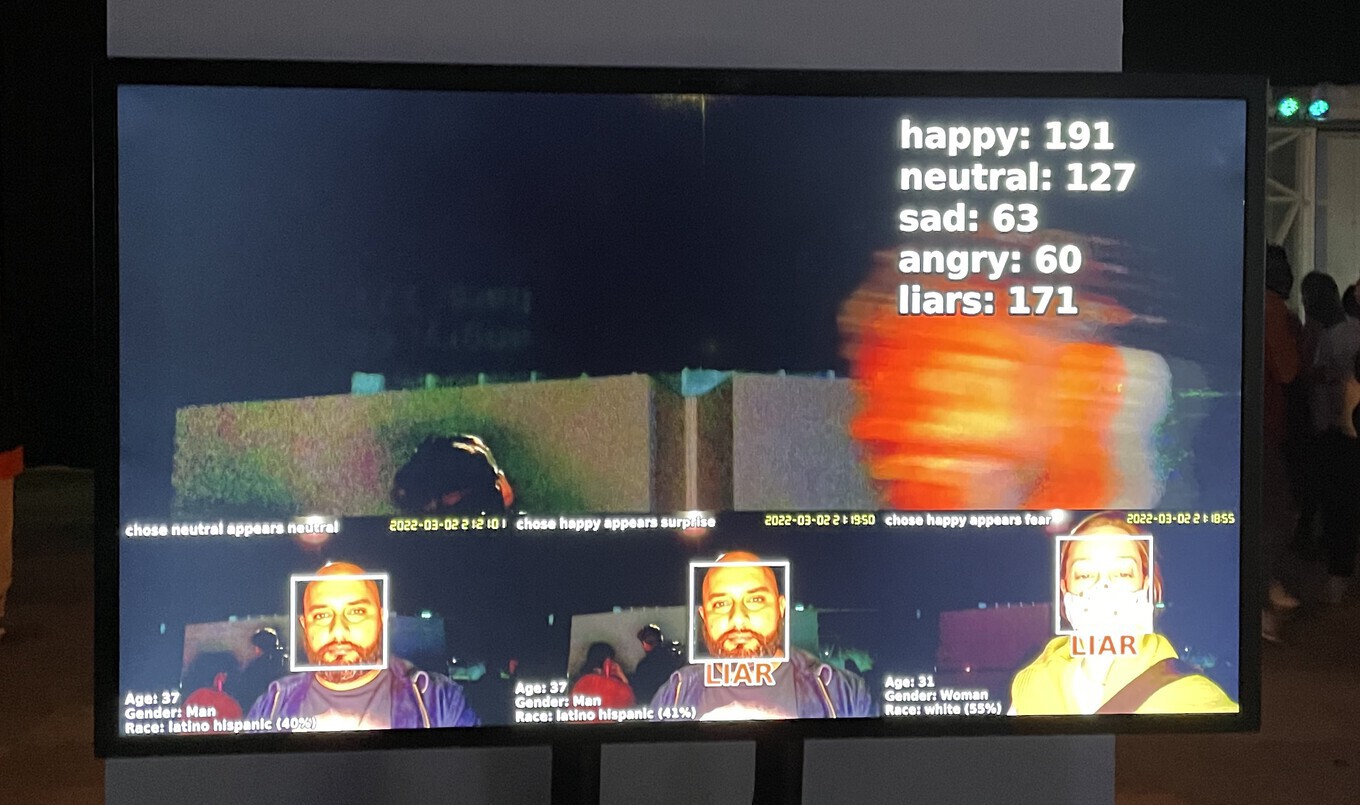

Meanwhile, unseen from the front of the kiosk, the photo of the button presser’s face appears on a large screen on the opposite side of the wall. When their facial analysis is complete, the results are displayed on top of their photo. Their stated emotion (based on their button press) is compared to their “actual” emotion (as determined by the AI). If the two emotions don’t match, the button presser is clearly a liar, and will be labeled as such.
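The comparison at the heart of the piece is simple enough to sketch. The following is a minimal illustration in Python (the language DeepFace itself is written in), not the piece’s actual code. It assumes the service reports a single dominant emotion using DeepFace’s labels (`angry`, `disgust`, `fear`, `happy`, `sad`, `surprise`, `neutral`); the button keys, the pairing of the slightly unhappy face with `sad`, and the caption format are hypothetical.

```python
# Hypothetical mapping from the kiosk's four buttons to DeepFace emotion labels.
# Pairing the "slightly unhappy" face with "sad" is an assumption for this sketch.
BUTTON_TO_EMOTION = {
    "happy": "happy",
    "neutral": "neutral",
    "slightly_unhappy": "sad",
    "angry": "angry",
}


def is_liar(button: str, dominant_emotion: str) -> bool:
    """True when the stated emotion (button) differs from the AI's reading."""
    return BUTTON_TO_EMOTION[button] != dominant_emotion


def caption(button: str, dominant_emotion: str) -> str:
    """Build the (hypothetical) text overlaid on a button presser's photo."""
    stated = BUTTON_TO_EMOTION[button]
    text = f"Stated: {stated} / Actual: {dominant_emotion}"
    # Any mismatch at all earns the label; there is no partial credit.
    if is_liar(button, dominant_emotion):
        text += " / LIAR"
    return text


if __name__ == "__main__":
    print(caption("happy", "neutral"))  # Stated: happy / Actual: neutral / LIAR
    print(caption("angry", "angry"))    # Stated: angry / Actual: angry
```

Because the check is an exact string match, a visitor whose face reads as `surprise` or `fear` is a liar no matter which button they press, which is part of what makes the verdict feel arbitrary.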

When no one has pressed a button recently, the live video feed from the hidden camera is shown at the top of the screen, allowing viewers to secretly watch their fellow patrons as they approach the kiosk. At the bottom of the screen, viewers can see the photos of the three most recent button pressers, along with their facial analyses. The kiosk also collects aggregate statistics, which are shown next to the live video feed: how many people pressed each of the four buttons, and how many of them were liars.
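The bookkeeping behind the display wall could be as small as a pair of counters and a three-slot queue. This is a hedged sketch in Python, not the piece’s implementation; the `KioskStats` class, its field names, and the shape of each recorded entry are all hypothetical.

```python
from collections import Counter, deque
from dataclasses import dataclass, field


@dataclass
class KioskStats:
    """Hypothetical running tally behind the screen on the far side of the wall."""

    presses: Counter = field(default_factory=Counter)  # presses per button
    liars: Counter = field(default_factory=Counter)    # "liars" per button
    # Only the three most recent analyses are kept; a deque with maxlen=3
    # silently drops the oldest entry when a fourth arrives.
    recent: deque = field(default_factory=lambda: deque(maxlen=3))

    def record(self, button: str, detected_emotion: str, lied: bool) -> None:
        """Log one button press and its facial analysis."""
        self.presses[button] += 1
        if lied:
            self.liars[button] += 1
        # Newest first, matching a left-to-right "most recent" strip.
        self.recent.appendleft((button, detected_emotion, lied))

    @property
    def total_liars(self) -> int:
        return sum(self.liars.values())


if __name__ == "__main__":
    stats = KioskStats()
    stats.record("happy", "happy", False)
    stats.record("happy", "sad", True)
    stats.record("angry", "neutral", True)
    stats.record("neutral", "neutral", False)
    print(dict(stats.presses), stats.total_liars, list(stats.recent))
```

Keeping only counts and the last three entries mirrors what the screen shows; older analyses simply fall out of the queue.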

The piece is meant to invite visitors to become voyeurs, hiding behind the wall and watching as others approach. A few well-placed chairs encourage this behavior.

Far more intriguing is what happens when button-pressing visitors discover their image on the other side of the wall. Some are horrified, but many are excited — it’s a new game to play! They proceed to try to “look” their age and gender, or exaggerate their facial expression so that the AI reads it “correctly.”

“We value your feedback.” lays bare our reflexive need to assign labels to ourselves and others, even to the point that we are teaching computers how to do it. Upon reflection, we are also forced to reckon with the power that labels wield over us — even the most rudimentary AI can use them to control our behavior.